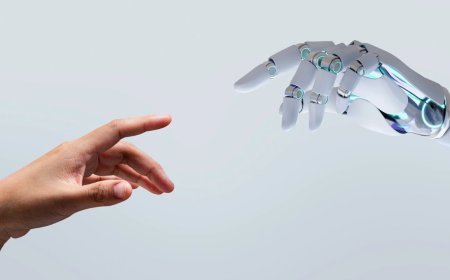

Why the U.S. May Soon Regulate AI Like the EU — and What That Means for You

This summer brought a stark warning: the Chinese-developed AI model DeepSeek sparked alarm in Congress. Senators demanded an investigation into its data security, fearing it could compromise user information or aid foreign entities. This isn’t about politics or gossip. It’s about the very real stakes of AI governance, and it shows just how urgently the U.S. now needs rules.

AI Regulation USA — From Caution to Urgency

Gone are the days when AI policy meant slogans like “move fast, break nothing.” Today, legislators across the spectrum are moving swiftly. Take Illinois: it passed legislation that bans AI-powered mental health tools from operating without licensed oversight. At the federal level, the TAKE IT DOWN Act requires platforms to remove non-consensual intimate images, including AI-generated ones, within 48 hours of a valid request. No more delays.

This surge in action marks a shift from “self-regulation is enough” to “oversight must be real, comprehensive, and immediate.”

EU AI Act Explained — Europe’s Regulatory Blueprint

Europe wasted no time. The EU AI Act, in force since August 2024 with its obligations phasing in over the following years, is the world’s first sweeping AI law. It sorts AI tools into four risk levels, from minimal to unacceptable, and outright bans those that pose the highest threats. AI systems used in hiring, health, and credit are labeled “high risk,” requiring rigorous testing, human oversight, and transparency. Non-compliance? We’re talking fines of up to €35 million or 7% of global annual revenue, whichever is higher.

This is a structured, preemptive model—designed to govern AI before it turns problematic, not after.

AI Laws in America — Patchwork or Precursor?

Here in the U.S., we don’t have a unified AI law yet—but that doesn’t mean nothing is happening.

- The FTC has already started cracking down on AI used to generate fake reviews.
- Illinois prioritized mental health safety; Texas pushed for business innovation.
- Other states, including California, New York, and Utah, are advancing bills that require AI transparency, ban non-consensual deepfakes, mandate oversight in hiring, and label AI-generated content.

The result? A hot mess of state-by-state laws that may confuse businesses and fragment innovation—unless federal action steps in soon.

Tech Policy News — Political Stakes Rising

Recent headlines spotlight a crossroads in AI policy:

- A proposed national AI moratorium, intended to pause state regulation for years, was stripped from a major Senate bill by a near-unanimous 99–1 vote. States will keep their authority to regulate AI. This signals strong resistance to over-centralizing power.
- Meanwhile, Connecticut AI experts are backing a bipartisan federal bill to punish companies that misuse personal data or use copyrighted content without permission. The message: innovation without accountability is recklessness.
- Additionally, new legislation proposes regulatory sandboxes for testing AI in financial services, offering room to innovate within guardrails rather than stifling progress outright.

These moves suggest a clear recognition: AI can’t be regulated by ignoring divergence between states or by letting tech giants write the rules alone.

OpenAI Regulation — The Spotlight on Big AI

When Sam Altman talks, lawmakers listen. Initially, he called for AI oversight, but by mid-2025 his tone had shifted toward cautioning against heavy-handed rules. That flip-flop captured Washington’s attention. Meanwhile, the FTC is already investigating OpenAI for possible harms linked to its outputs.

In Europe, the AI Act requires providers of general-purpose AI models to document their model architecture, summarize their training data, and report their compute use. U.S. lawmakers, noticing the gaps, are now considering similar transparency mandates.

What This Means for You — Everyday Impacts

AI regulation isn’t just a tech or legal issue—it impacts your daily life:

- You’ll see labels on AI-generated content, from news articles to image edits, that make clear what’s real and what isn’t.
- High-risk tools, like job-screening AI or medical diagnostics, should become safer through testing and oversight.
- Privacy protections for your data will tighten, so your personal info has fewer paths into unknown systems.
- Consumer safety nets will grow, from deepfake defenses to accuracy safeguards in finance and hiring.
- Reliability may come at a cost: expect some slowdowns or higher prices as firms adapt, offset by a net gain in trust.

Depending on how federal and state policies align—or don’t—the ripples of AI law could be felt unevenly across the country.

Conclusion — Striking the Balance for AI’s Future

Here’s where we stand:

- Europe has laid down the law with its proactive, risk-based model.
- The U.S. sees concern across both parties, and debates have shifted from whether to regulate to how.
- Key questions remain: Will federal regulation rise fast enough to avoid a patchwork landscape? Can oversight protect consumers without crushing innovation?

AI regulation isn’t just coming—it’s here. And the big question going forward is whether America can lead with a balanced strategy: one that nurtures innovation while protecting rights, safety, and trust.

This debate will define the next chapter of AI, and your everyday experience with it.
